In 2013, the United States was shaken by the leaks of Edward Snowden, a former NSA contractor and CIA employee who fled the country and was later granted asylum in Russia. He passed highly classified documents to journalists, a disclosure that U.S. officials said compromised the nation’s security. In a 2014 interview, Snowden added a particularly alarming revelation about an NSA cyber warfare program named “MonsterMind.” This program, likened to the AI system “Skynet” from the science fiction movie The Terminator, was reportedly designed to detect and counter cyberattacks autonomously, without human intervention. The comparison raised widespread concern: in the film series, Skynet’s autonomous actions lead to a nuclear apocalypse and the near annihilation of humanity, a scenario that suddenly seemed disturbingly plausible.

Whether we like it or not, AI-driven weapons are becoming part of our reality. Trusting these systems is difficult, however, because they can be manipulated or turned against us. Snowden warned that someone could easily spoof an attack so that it appeared to originate from one country, such as Russia, when it actually came from another, such as China, triggering unintended and catastrophic retaliation. Today’s most capable AI systems still require massive supercomputers and substantial power, but future advances could make initiating devastating actions much simpler and more accessible. This potential for disaster has led prominent figures such as Stephen Hawking and Elon Musk to call for restrictions on military AI research and a ban on offensive autonomous weapons, commonly referred to as “killer robots.”

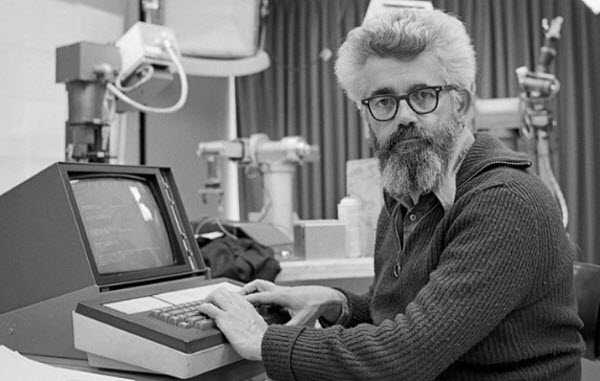

To understand the risks of AI in military contexts, we must first look at its history. The term “artificial intelligence” has been around for decades, since long before personal computers became ubiquitous. The modern concept emerged during President Eisenhower’s era: John McCarthy coined the term in a 1955 proposal for a workshop held at Dartmouth College in the summer of 1956. The workshop brought together leading scientists to discuss the potential of AI, presenting it as a technology that could simulate human reasoning through programmed rules. The topics under discussion also included neural networks, which mimic brain cells in order to learn new behaviors. Early pioneers such as Marvin Minsky, who later co-founded the AI lab at MIT, and McCarthy, often called the “father of AI,” were instrumental in advancing the field. The U.S. government funded AI research heavily, hoping it would provide an edge in the Cold War. For a while AI’s promise seemed close to being realized, and by 1970 researchers were predicting that machines with human-level intelligence would emerge within a few years. Progress then stalled and funding was cut, leading to what is now known as the “AI winter,” which delayed significant advances until private companies took up the work in the 1980s.

In 1984, Hollywood began speculating on how AI could dominate and destroy humanity with the movie The Terminator. The film’s story centers on an AI program called “Skynet” that takes control of countless computer servers and attempts to eradicate humanity by launching nuclear missiles, triggering a global nuclear war. The series portrays a grim future in which machines dominate, driving humans to travel back in time to prevent the creation of Skynet.

In reality, the most notable confrontation between humans and AI took place in 1997 on a chessboard, in a match billed as “The Brain’s Last Stand.” World chess champion Garry Kasparov faced IBM’s supercomputer Deep Blue, which could evaluate up to 200 million positions per second. Deep Blue won the six-game match 3½ to 2½, a pivotal moment that showed a machine could outplay the best human at a game of strategy. Although Deep Blue didn’t display human-like learning, it highlighted AI’s potential to excel at specific tasks. During the first decade of the 21st century, AI technology expanded significantly, with advances in self-driving cars, smartphones, chatbots, and robots capable of performing specialized tasks. These technologies are now integral to our daily lives, which prompts a question: as these seemingly harmless assistants gain our trust, will we delegate more critical and potentially dangerous functions to them?

To address this concern, we can look to the U.S. military, which has long driven technological progress through substantial funding. Without military investment, we likely wouldn’t have GPS, computers, or the internet. It’s not surprising, then, that military funding also supports AI research. The problem arises when robots are weaponized, which introduces a very different set of ethical and operational challenges. It’s unclear what might happen when autonomous weapon systems interact under real-world conditions. And how effective can programming to avoid harming humans be in machines that are designed to cause harm? This conundrum became evident with the advent of drones, which are popular because they can carry out attacks from great distances without putting their operators at risk. Current drones are controlled by human operators who make the final decisions, but the fear is that technological advances could eventually let drones make lethal decisions on their own. This fear has prompted an outcry from leading scientists around the world, who have signed open letters warning of the dangers of combining AI with military hardware.

Stephen Hawking, a prominent voice against weaponizing AI, feared the moment when creators no longer fully understand the weapons they build. He argued that humans, limited by slow biological evolution, could not keep pace with rapidly advancing AI. Ray Kurzweil, a director of engineering at Google, has heightened such concerns with his prediction that AI could surpass human intelligence by 2045.

However, AI-based weapons aren’t the only concern. The U.S. stock market “Flash Crash” of May 6, 2010, in which nearly a trillion dollars in market value vanished and then largely reappeared within minutes, highlighted the potential of automated systems for economic disruption. The incident suggested that relatively simple trading algorithms could manipulate markets by rapidly placing and canceling orders, creating brief price discrepancies. Had the losses persisted, the economic consequences could have been catastrophic, potentially exceeding those of the 9/11 attacks or the 2008 financial crisis. Even so, many scientists remain focused on the physical threats AI poses, such as assassination, destabilization of nations, subjugation of populations, or even selective genocide.

Scientists have highlighted the need for regulation to prevent AI from dominating humanity. Everyday AI, meanwhile, poses a different set of challenges. Self-driving cars, for example, are a form of AI that requires immediate regulation. A slight programming error could make a self-driving car uncontrollable. If a child runs in front of a car programmed to protect human life, should it swerve to avoid the child, risking the passengers’ safety, or hit the child to protect the occupants? Human drivers make such split-second decisions and are held accountable for them. But who would be responsible if a self-driving car caused an accident?

To effectively regulate AI, scientists have categorized it into four types, all of which require oversight:

- Machines that think like humans: These are machines capable of processes similar to human thought. The category excludes narrow systems such as chess-playing computers and focuses instead on AI that reasons the way people do and could therefore come to surpass human intelligence.

- Machines that act like humans: These machines exhibit behaviors akin to human actions. Replicating the complexity of human emotions in a computer program would be extremely dangerous, although it is currently impossible.

- Machines that think rationally: These machines have goals and can reason out strategies to achieve them.

- Machines that act rationally: These machines operate solely in ways that drive them towards achieving set goals.

Currently, neuroscientists are exploring ways AI could help paralyzed patients regain motor control. The military, however, sees potential in the same technologies for enhancing soldiers’ physical capabilities. Albert Einstein is said to have warned that technology designed to assist people could ultimately be used to harm them. The military is also investigating how technologies developed by neuroscientists could be turned into weapons, such as unmanned tanks, memory-erasing tools, and so-called brain prints intended to read people’s minds. Additionally, drones capable of making autonomous kill decisions are under development, although military officials insist we are still far from that reality.